Introduction to Large Language Models (LLMs)

- Archishman Bandyopadhyay

- Jun 15, 2023

1. Overview of Large Language Models

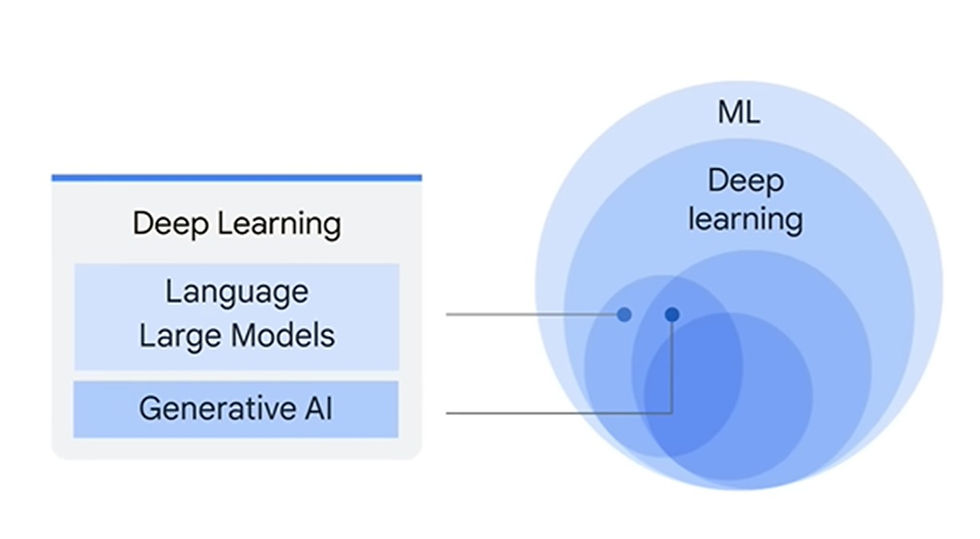

Large language models (LLMs) are a subset of deep learning. They overlap with generative AI, which is also part of deep learning.

Generative AI can produce new content, including text, images, audio, and synthetic data.

LLMs are large general-purpose language models that can be pre-trained and fine-tuned for specific purposes.

Pre-training involves training the model for general language problems, while fine-tuning tailors the model for specific tasks in different fields.

2. Key Features of Large Language Models

"Large" refers both to the enormous size of the training data set and to the number of parameters.

"General purpose" means the models can solve common language problems across industries.

"Pre-trained and fine-tuned" refers to the process of first training the model on a large data set and then customizing it for specific tasks using a much smaller data set.

3. Benefits of Using Large Language Models

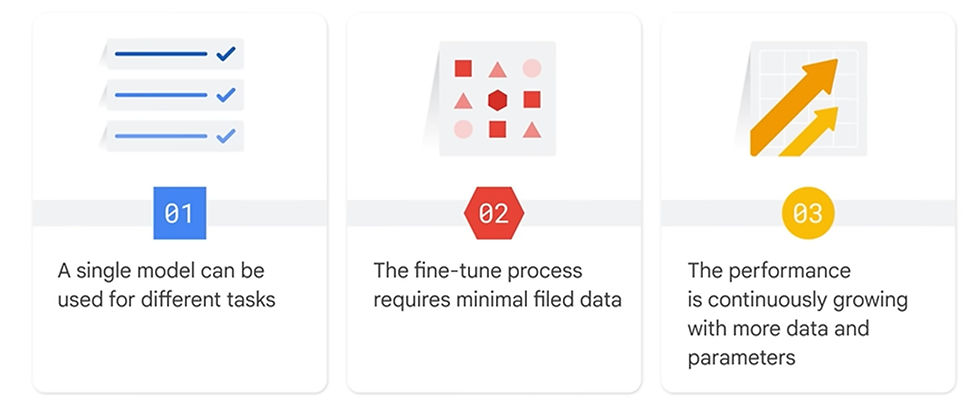

Single model versatility: A single model can be used for various tasks such as language translation, text generation, and question answering.

Minimal domain training data: Large language models can achieve decent performance even when only a small amount of domain-specific training data is available.

Continuous improvement: The performance of large language models improves as more data and parameters are added.

4. Comparison with Traditional Machine Learning Development

When you build on a pre-trained LLM, you don't need ML expertise, extensive training examples, or your own model training run. The main task is prompt design.

Traditional machine learning development requires ML expertise, training examples, and a model training step, along with the compute time and hardware to run it.

5. Text Generation Use Case: Question Answering

Question answering (QA) is a subfield of natural language processing.

QA systems trained on text and code can answer factual, definitional, and opinion-based questions.

LLMs can generate free-text responses directly from the context they are given, so building a QA system no longer requires domain-specific knowledge.

6. Prompt Design and Prompt Engineering

Prompt design involves creating a clear, concise, and informative prompt tailored to a specific task.

Prompt engineering focuses on improving performance by using domain-specific knowledge, providing desired output examples, or employing effective keywords.

Prompt design is essential, while prompt engineering enhances accuracy and performance.
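
To make the distinction concrete, here is a minimal sketch of assembling a prompt in Python. The sentiment task, the `Example` class, and the `build_prompt` helper are all hypothetical names chosen for illustration, not part of any Google API. The zero-shot prompt corresponds to basic prompt design, and the few-shot prompt adds examples of the desired output, one of the prompt engineering techniques mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One input/output pair shown to the model as a demonstration."""
    text: str
    label: str


def build_prompt(instruction: str, examples: list, query: str) -> str:
    """Assemble an instruction, optional worked examples, and the new input."""
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts.append(f"Review: {ex.text}")
        parts.append(f"Sentiment: {ex.label}")
        parts.append("")
    # End with an open slot so the model continues with the label.
    parts.append(f"Review: {query}")
    parts.append("Sentiment:")
    return "\n".join(parts)


# Hypothetical task and data, used only to show the prompt layout.
instruction = "Classify the sentiment of each review as positive or negative."
examples = [
    Example("The battery lasts all day.", "positive"),
    Example("The screen cracked within a week.", "negative"),
]

# Prompt design: a clear instruction plus the input.
zero_shot = build_prompt(instruction, [], "Setup took five minutes.")

# Prompt engineering: the same task, plus examples of the desired output.
few_shot = build_prompt(instruction, examples, "Setup took five minutes.")

print(few_shot)
```

Either string would then be sent to whichever model you are using. Whether the extra examples actually help depends on the model and the task, so it is worth comparing both variants.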

7. Types of Large Language Models

Generic language models predict the next word based on training data and can be used for autocompletion.

Instruction-tuned models predict responses based on given instructions.

Dialogue-tuned models specialize in responding to questions in a conversational manner.
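
As a rough illustration of what "predict the next word based on training data" means for a generic language model, the sketch below builds a tiny bigram counter in plain Python. This is a deliberately simplified stand-in and not how LLMs are implemented: real models use neural networks over tokens rather than word counts. The function names and the three-sentence corpus are assumptions made for this example.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: list) -> dict:
    """Count, for each word, which words follow it in the training text."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def predict_next(model: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy training data, invented for this example.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram(corpus)

print(predict_next(model, "the"))    # cat
print(predict_next(model, "sat"))    # on
print(predict_next(model, "zebra"))  # None
```

Autocompletion works the same way in spirit: given what has been typed so far, suggest the continuation that was most common in the training data.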

8. Chain of Thought Reasoning

Models are more likely to give the correct answer when they first output text that explains the reason behind the answer.
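
The sketch below shows one way this idea might look in a prompt. The question, the "Let's think step by step" wording, and the helper names are assumptions for illustration, and `sample_response` is a hand-written stand-in for model output, not a real model response. The point is only the shape: the reasoning prompt invites an explanation first, and the final answer is read from the end of the response.

```python
import re
from typing import Optional

# Hypothetical arithmetic question used only for illustration.
QUESTION = (
    "A shelf holds 4 boxes with 6 pens each. "
    "5 pens are removed. How many pens remain?"
)


def direct_prompt(question: str) -> str:
    """Ask for the answer immediately, with no room for reasoning."""
    return f"Q: {question}\nA: The answer is"


def reasoning_prompt(question: str) -> str:
    """Invite the model to write out its reasoning before answering."""
    return f"Q: {question}\nA: Let's think step by step."


def extract_answer(response: str) -> Optional[int]:
    """Take the last 'answer is N' statement in the response, if any."""
    matches = re.findall(r"answer is\s+(-?\d+)", response, flags=re.IGNORECASE)
    return int(matches[-1]) if matches else None


# Hand-written stand-in for what a model might return.
sample_response = (
    "There are 4 boxes with 6 pens each, so 4 * 6 = 24 pens. "
    "Removing 5 leaves 24 - 5 = 19. The answer is 19."
)

print(reasoning_prompt(QUESTION))
print(extract_answer(sample_response))  # 19
```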

9. Task-Specific Tuning and Parameter-Efficient Tuning

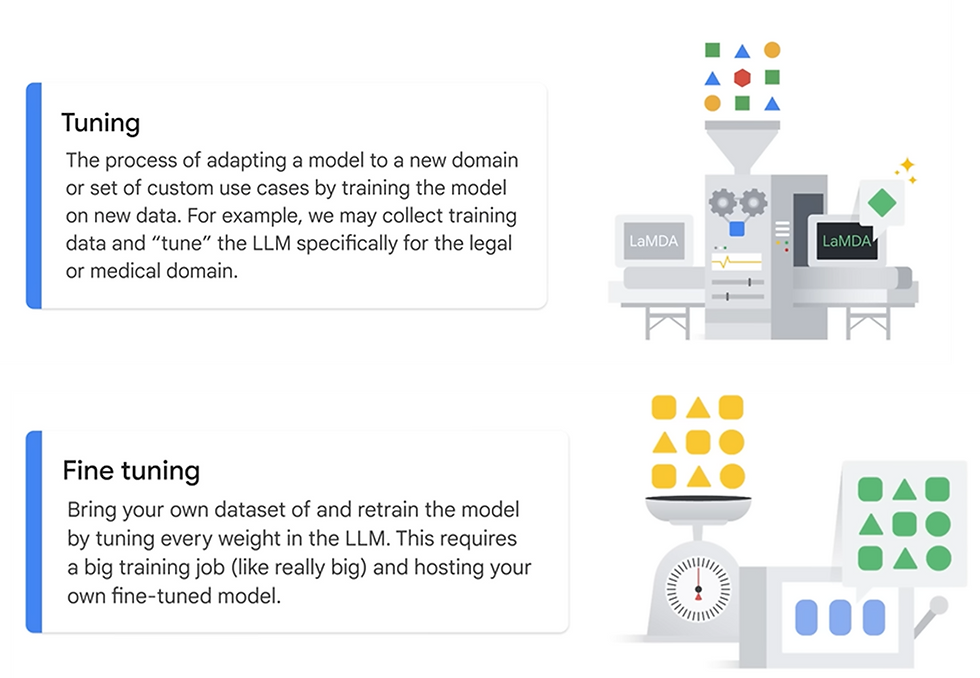

Task-specific tuning allows customization of the model's response based on examples of the desired task.

Fine-tuning involves training the model on new data and adjusting all model weights, which typically means a large training job and hosting your own copy of the tuned model.

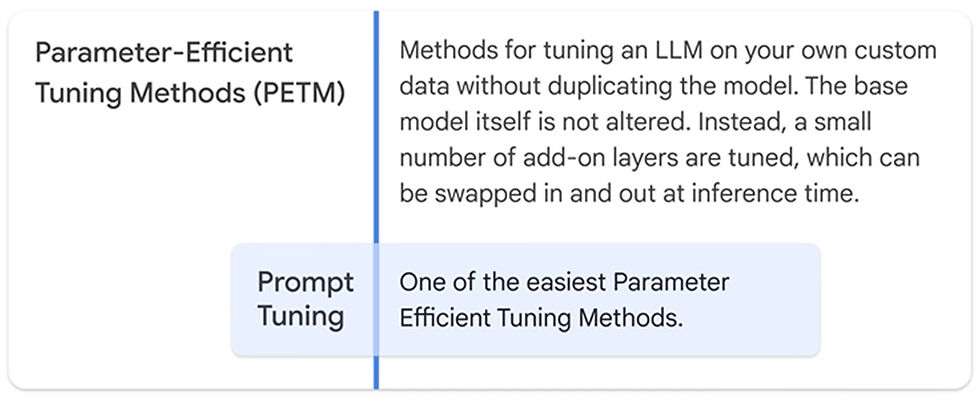

Parameter-efficient tuning methods (PETM) tune add-on layers without altering the base model, enabling customization without duplicating the model.
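
A toy sketch can show why this avoids duplicating the model. Here the "base model" is a frozen three-weight linear function and the "add-on layer" is just a scale and a bias applied to its output. This is a simplified illustration with hypothetical names and made-up data, not any specific parameter-efficient method. Two tasks each train their own two-number adapter while sharing the same untouched base weights.

```python
# Frozen base weights: shared by every task and never updated.
BASE_WEIGHTS = (0.5, -1.0, 2.0)


def base_model(x):
    """The pre-trained model: a fixed linear function of the input."""
    return sum(w * xi for w, xi in zip(BASE_WEIGHTS, x))


def adapted_model(x, adapter):
    """Base output passed through a small trainable add-on (scale, bias)."""
    scale, bias = adapter
    return scale * base_model(x) + bias


def mse(data, adapter):
    """Mean squared error of the adapted model on (input, target) pairs."""
    return sum((adapted_model(x, adapter) - t) ** 2 for x, t in data) / len(data)


def tune_adapter(data, steps=500, lr=0.05):
    """Gradient descent on the adapter only; BASE_WEIGHTS is left alone."""
    scale, bias = 1.0, 0.0  # identity adapter: starts out equal to the base model
    n = len(data)
    for _ in range(steps):
        grad_scale = grad_bias = 0.0
        for x, target in data:
            h = base_model(x)
            err = scale * h + bias - target
            grad_scale += 2 * err * h / n
            grad_bias += 2 * err / n
        scale -= lr * grad_scale
        bias -= lr * grad_bias
    return scale, bias


# Made-up inputs and two made-up tasks defined on top of the base output.
inputs = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]
task_a = [(x, 2 * base_model(x) + 1) for x in inputs]
task_b = [(x, -1 * base_model(x) + 3) for x in inputs]

adapter_a = tune_adapter(task_a)
adapter_b = tune_adapter(task_b)

print("base weights:", BASE_WEIGHTS)
print("task A error before/after:", mse(task_a, (1.0, 0.0)), mse(task_a, adapter_a))
print("task B error before/after:", mse(task_b, (1.0, 0.0)), mse(task_b, adapter_b))
```

Each task stores only its two adapter values, while full fine-tuning would keep a separate copy of every weight per task. In a real LLM the same trade-off applies at a much larger scale.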

10. Generative AI Studio and App Builder

Generative AI Studio provides tools and resources to explore and customize generative AI models, including pre-trained models, fine-tuning tools, and deployment options.

Gen AI App Builder allows the creation of Gen AI apps without coding, using a drag-and-drop interface, visual editor, and conversational AI engine.

11. PaLM API and MakerSuite

PaLM API allows testing and experimentation with Google's large language models and Gen AI tools.

MakerSuite integrates with PaLM API, offering a graphical user interface for accessing the API, along with model training, deployment, and monitoring tools.

Check out the complete video lecture here:
